The Challenge

Most large language models are accessed through cloud APIs that require internet connectivity and can be expensive to use. Users need an efficient document Q&A system that works offline.

The Solution

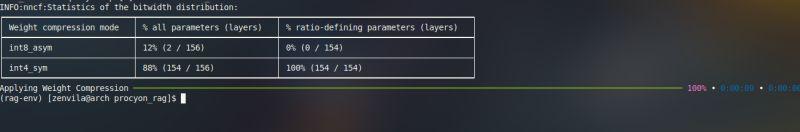

Built a fully Dockerized retrieval-augmented generation (RAG) system that answers questions about PDF documents using Llama 3.1 8B quantized to INT4, running entirely offline.
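The retrieval half of a RAG pipeline can be sketched as follows: split document text into overlapping chunks, embed each chunk, retrieve the chunks most similar to the question, and pack them into a prompt for the LLM. This is a minimal, dependency-free sketch under stated assumptions, not this project's implementation. The term-frequency "embedding" and the in-memory `VectorIndex` are hypothetical stand-ins for a real embedding model and vector database, PDF text extraction is assumed to have already happened, and the final call to the INT4 model is omitted.

```python
import math
import re
from collections import Counter


def chunk_text(text: str, chunk_size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into word windows of chunk_size words, overlapping by `overlap`."""
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, max(len(words), 1), step):
        window = words[start:start + chunk_size]
        if window:
            chunks.append(" ".join(window))
        if start + chunk_size >= len(words):
            break
    return chunks


def embed(text: str) -> Counter:
    # Hypothetical stand-in for a neural embedding model: a sparse
    # term-frequency vector over lowercase alphanumeric tokens.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Counter returns 0 for missing terms, so absent words add nothing.
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class VectorIndex:
    """Hypothetical in-memory index standing in for a vector database."""

    def __init__(self) -> None:
        self.entries: list[tuple[str, Counter]] = []

    def add(self, chunk: str) -> None:
        self.entries.append((chunk, embed(chunk)))

    def search(self, query: str, k: int = 2) -> list[tuple[float, str]]:
        q = embed(query)
        scored = [(cosine(q, vec), chunk) for chunk, vec in self.entries]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:k]


def build_prompt(question: str, hits: list[tuple[float, str]]) -> str:
    # Ground the model's answer in the retrieved chunks only.
    context = "\n".join(f"- {chunk}" for _, chunk in hits)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}\nAnswer:"
    )


# Usage with made-up page texts standing in for extracted PDF content.
pages = [
    "NNCF compresses model weights to INT4 so the LLM fits in less memory.",
    "Docker packages the application and its dependencies into a portable container.",
    "OpenVINO runs the quantized model efficiently on a CPU without internet access.",
]
index = VectorIndex()
for page in pages:
    for chunk in chunk_text(page, chunk_size=20, overlap=5):
        index.add(chunk)

question = "How are model weights compressed?"
hits = index.search(question, k=1)
prompt = build_prompt(question, hits)
print(prompt)
```

In a real deployment, the resulting `prompt` would be passed to the locally hosted quantized model; because retrieval narrows the context to a few relevant chunks, the model does not need the whole PDF in its context window.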

Key Achievement

Achieved a 70% reduction in model size while maintaining accuracy, enabling deployment on modest hardware with no internet dependency.

Technologies Used

Python, OpenVINO, NNCF, Docker, RAG, Vector Databases, LLMs